Black Swift Technologies (BST) has released the following article about technology developed by the company that will allow unmanned aerial systems (UAS) to predict critical failures and use artificial intelligence and machine vision to recognize obstacles and select a safe landing area.

Artificial intelligence (AI) can bring a predictive measure to unmanned aircraft systems, coupling failure prediction with a vision system that could recognize objects on the ground that need to be avoided in the event of a forced landing due to system or flight failure.

Using AI neural networks, Black Swift Technologies has been developing technology that would enable a UAS to autonomously locate a safe landing site, avoiding people, vehicles, structures, and terrain obstacles in hierarchical order. The technology, named SwiftSTL (Swift Safe To Land), uses AI to determine the safest place to land the aircraft in the event of a flight emergency such as engine failure.

The AI system makes predictions based on variables such as the aircraft’s current position, energy state, altitude, and wind speed, and then invokes an algorithm to steer the plane to a safe landing site. The AI could even predict failures before they happen so that maintenance can be performed to avoid the potential failure and subsequent crash.
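The article does not describe how SwiftSTL turns position, energy state, altitude, and wind into a steering decision. One ingredient such a system plausibly needs is a check of which candidate sites the aircraft can still reach in an unpowered glide. The minimal sketch below assumes a constant glide ratio, a uniform wind, straight-line tracks, and a fixed altitude reserve; `Site`, `ground_speed_along`, `reachable_sites`, and every parameter are hypothetical names, not BST's API.

```python
import math
from dataclasses import dataclass


@dataclass
class Site:
    """Hypothetical candidate landing site, positioned relative to the aircraft."""
    name: str
    east_m: float   # metres east of the aircraft
    north_m: float  # metres north of the aircraft


def ground_speed_along(bearing_rad, airspeed, wind_e, wind_n):
    """Ground speed while holding a straight track on bearing_rad (0 = north, clockwise).

    wind_e / wind_n give the direction the air is moving *toward*, in m/s (assumed uniform).
    """
    d_e, d_n = math.sin(bearing_rad), math.cos(bearing_rad)
    along = wind_e * d_e + wind_n * d_n                    # + tailwind, - headwind
    cross_sq = max(0.0, wind_e**2 + wind_n**2 - along**2)  # squared crosswind component
    if cross_sq >= airspeed**2:
        return 0.0                                         # cannot crab enough to hold the track
    return max(0.0, along + math.sqrt(airspeed**2 - cross_sq))


def reachable_sites(sites, altitude_m, airspeed, glide_ratio, wind_e, wind_n, reserve_m=30.0):
    """Return (name, distance_m) for sites reachable in a straight glide, nearest first."""
    usable_alt = max(0.0, altitude_m - reserve_m)          # height held back for the approach (assumed)
    time_aloft = usable_alt * glide_ratio / airspeed        # sink rate approximated as airspeed / glide_ratio
    reachable = []
    for s in sites:
        dist = math.hypot(s.east_m, s.north_m)
        bearing = math.atan2(s.east_m, s.north_m)
        if ground_speed_along(bearing, airspeed, wind_e, wind_n) * time_aloft >= dist:
            reachable.append((s.name, dist))
    return sorted(reachable, key=lambda item: item[1])
```

For example, with 130 m of altitude, a 10:1 glide ratio, 15 m/s airspeed, and a 5 m/s wind from the north (`wind_n=-5.0`), a site 900 m north is rejected because of the headwind, while sites 500 m east and 900 m south remain reachable.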

Algorithms and Heuristics

Almost anything the UAV senses about its environment can be represented as a tensor or sensor input, including real-time video and still images from its cameras. From these images, the AI algorithm detects and classifies objects. SwiftSTL then applies supplied heuristics that define what to avoid (people, vehicles, building structures, and terrain obstacles) when determining where to land safely. Reliable object detection is crucial: once the aircraft has identified a landing area, it can look in and around the site in near real time for objects that were not visible from higher altitudes, such as people or vehicles.
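As an illustration of how supplied heuristics could be applied during that near-real-time re-check, the sketch below compares freshly detected objects against the chosen landing zone and flags the site if an avoid-listed object lies inside it or within a safety buffer. The detector itself is out of scope; the `Box` type, the label strings, the `zone_is_clear` function, and the 20-pixel buffer are assumptions for illustration only.

```python
from typing import List, NamedTuple, Tuple


class Box(NamedTuple):
    """Axis-aligned box in image pixels: (x0, y0) top-left, (x1, y1) bottom-right."""
    x0: float
    y0: float
    x1: float
    y1: float


# Classes the text says must be avoided; the label strings themselves are hypothetical.
AVOID = {"person", "vehicle", "structure", "terrain_obstacle"}


def overlaps(a: Box, b: Box) -> bool:
    """True if the two boxes share any area."""
    return a.x0 < b.x1 and b.x0 < a.x1 and a.y0 < b.y1 and b.y0 < a.y1


def zone_is_clear(zone: Box, detections: List[Tuple[str, Box]], buffer_px: float = 20.0):
    """Re-check a chosen landing zone against new detections.

    Returns (clear, blocking_labels). The zone is grown by buffer_px on every side
    so that objects just outside it still count as hazards.
    """
    grown = Box(zone.x0 - buffer_px, zone.y0 - buffer_px,
                zone.x1 + buffer_px, zone.y1 + buffer_px)
    blocking = [label for label, box in detections
                if label in AVOID and overlaps(grown, box)]
    return not blocking, blocking
```

Classes outside the avoid list (grass, for instance) never block a site, and the buffer catches a person standing just beyond the zone edge.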

How it Works

SwiftSTL technology integrates state-of-the-art machine learning algorithms, artificial intelligence, and cutting-edge onboard processors to capture and segment images at altitude, enabling a UAS to autonomously identify a safe landing area in the event of a catastrophe.

Black Swift’s technology processes high-resolution images quickly and efficiently onboard the UAS to enable the identification of objects and terrain that must be avoided during a safe emergency landing. The technology uses a machine vision technique known as semantic segmentation, which allows objects to be classified at the pixel level by assigning each pixel to a class label. It uses heuristics to make sure that the aircraft doesn’t hit people, vehicles, buildings, or structures. Once it finds the ideal landing location, it relies on object detection to finish the landing.
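The last step of semantic segmentation, turning per-class scores into one label per pixel, can be sketched as a per-pixel argmax, followed by a mask of the pixels whose class must be avoided. The sketch assumes a network (not shown) has already produced a score for every class at every pixel; `CLASSES`, `segment`, and `hazard_mask` are illustrative names, not part of SwiftSTL.

```python
from typing import List, Sequence, Set

# Hypothetical class list; index i in a pixel's score vector belongs to CLASSES[i].
CLASSES = ["grass", "road", "person", "vehicle", "building"]


def segment(scores: Sequence[Sequence[Sequence[float]]]) -> List[List[int]]:
    """Assign each pixel the index of its highest-scoring class (per-pixel argmax).

    scores[row][col] is the network's score vector for that pixel.
    """
    return [[max(range(len(px)), key=px.__getitem__) for px in row] for row in scores]


def hazard_mask(label_map: List[List[int]], hazard_ids: Set[int]) -> List[List[bool]]:
    """Mark pixels whose class must be avoided during an emergency landing."""
    return [[label in hazard_ids for label in row] for row in label_map]
```

Because every pixel receives exactly one label, the resulting map can be rendered as the kind of color-coded image the article describes, one color per class.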

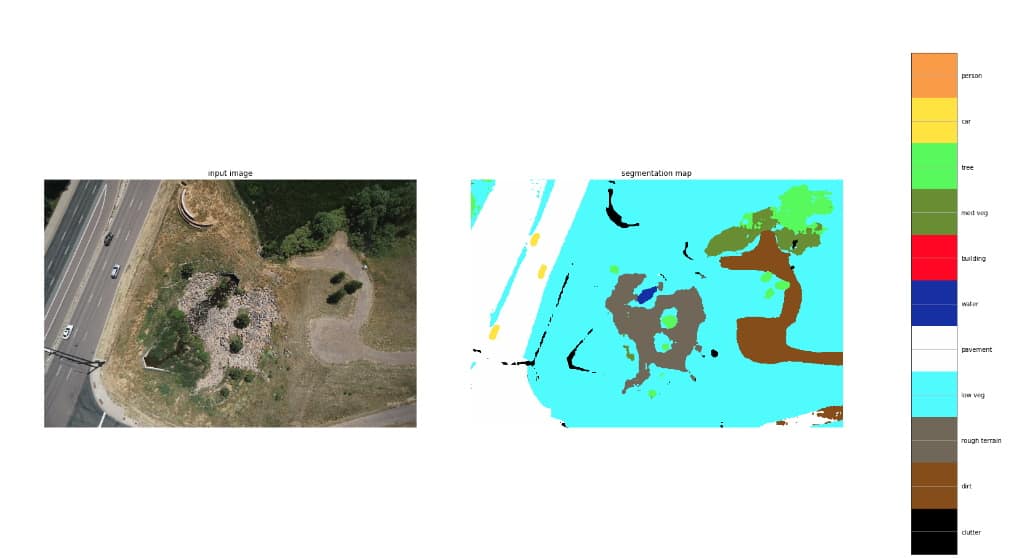

SwiftSTL has demonstrated that it can accurately identify people, cars, trees, buildings, water, and other classes. These classifications are visible in the color-coded semantic segmentation map generated by the system, shown in the figure.

All of the classifications identified in the vertical segmentation legend on the right side of the figure are already mapped into the landing heuristics in order of priority: avoid people first, then cars, then trees, then buildings, and finally rough terrain. Each class has its own color code, which is built into the heuristic algorithm and used in training the AI.
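The article does not say how BST weights these classes. One way to encode a strict avoidance order like this, offered purely as an assumption, is to score each candidate landing window by its hazard-pixel counts in priority order and compare those counts lexicographically. The label strings, square windows, and the `window_cost` and `best_landing_window` functions are illustrative.

```python
from typing import List, Tuple

# Avoidance order taken from the text: people, then cars, trees, buildings, rough terrain.
PRIORITY = ["person", "car", "tree", "building", "rough_terrain"]


def window_cost(label_map: List[List[str]], top: int, left: int, size: int) -> Tuple[int, ...]:
    """Count hazard pixels of each class inside a size x size window, in priority order."""
    counts = dict.fromkeys(PRIORITY, 0)
    for row in range(top, top + size):
        for col in range(left, left + size):
            label = label_map[row][col]
            if label in counts:
                counts[label] += 1
    return tuple(counts[name] for name in PRIORITY)


def best_landing_window(label_map: List[List[str]], size: int):
    """Return (cost, (top, left)) of the window with the lexicographically smallest cost.

    Comparing tuples lexicographically means one person pixel outweighs any number
    of car pixels, one car pixel outweighs any number of tree pixels, and so on.
    """
    rows, cols = len(label_map), len(label_map[0])
    return min(
        (window_cost(label_map, r, c, size), (r, c))
        for r in range(rows - size + 1)
        for c in range(cols - size + 1)
    )
```

Under this scheme a window containing a building pixel is preferred over one containing a tree pixel, matching the order in the legend. A real system would likely also weigh site size and distance, which this sketch ignores.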

Prevent the Accident Before it Happens

Machine learning is a very powerful tool and can be used for a variety of applications. BST has focused its development on two distinct areas: machine vision for safe landing solutions, and classifiers for preventive maintenance. Black Swift built these machine learning algorithms with AdaBoost, a boosting technique that combines many weak classifiers into a single, stronger classifier. With machine learning, SwiftSTL can try to predict whether the aircraft is going to fail. The goal is to classify whether the servos, propulsion system, batteries, communications, GPS, or another subsystem is going to fail. These AdaBoost-based prediction algorithms give users that information before the flight, so that the problem can be prevented rather than solved after it occurs.
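For readers unfamiliar with boosting, the sketch below is a textbook discrete AdaBoost over one-feature decision stumps (the weak classifiers), applied to a toy subsystem-health problem. It is not BST's model: the features (for example vibration level and motor temperature), the +1/-1 failure labels, and all function names are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple

Stump = Tuple[float, int, float, int]  # (alpha, feature index, threshold, polarity)


def stump_predict(x: Sequence[float], feature: int, threshold: float, polarity: int) -> int:
    """Weak classifier: +polarity above the threshold, -polarity at or below it."""
    return polarity if x[feature] > threshold else -polarity


def fit_stump(X, y, w):
    """Exhaustively pick the stump with the lowest weighted training error."""
    best = None
    for f in range(len(X[0])):
        for thr in sorted({x[f] for x in X}):
            for pol in (1, -1):
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if stump_predict(xi, f, thr, pol) != yi)
                if best is None or err < best[0]:
                    best = (err, f, thr, pol)
    return best


def adaboost(X, y, rounds: int = 10) -> List[Stump]:
    """Discrete AdaBoost; labels must be +1 (failure expected) or -1 (healthy)."""
    n = len(X)
    w = [1.0 / n] * n
    model = []
    for _ in range(rounds):
        err, f, thr, pol = fit_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)    # avoid log(0) when a stump is perfect
        alpha = 0.5 * math.log((1 - err) / err)  # vote weight of this weak learner
        model.append((alpha, f, thr, pol))
        # Up-weight samples this stump got wrong, down-weight the ones it got right.
        w = [wi * math.exp(-alpha * yi * stump_predict(xi, f, thr, pol))
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model


def predict(model: List[Stump], x: Sequence[float]) -> int:
    """Weighted vote of all stumps; +1 means the subsystem is predicted to fail."""
    score = sum(alpha * stump_predict(x, f, thr, pol) for alpha, f, thr, pol in model)
    return 1 if score >= 0 else -1
```

Trained on a handful of hypothetical `[vibration_g, motor_temp_c]` samples, for example `model = adaboost(X, y, rounds=5)`, the weighted vote `predict(model, [1.0, 60.0])` flags a likely failure. Each stump only needs to beat chance on the reweighted data; the vote across rounds is what makes the combined classifier stronger.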

The AdaBoost algorithms use machine learning to predict when operators need to replace the electric motor or other components on the aircraft. This preventive maintenance helps ensure that the drone doesn’t enter an emergency state as a result of pre-existing anomalies. If the drone does enter an emergency state, the autonomous branch menus and site selection, also running on the UAS, serve as the last attempt to save the vehicle. Finally, the AI, working with the vision camera system, is used to actually land the vehicle safely.

“This technology is of value to all commercial drone operators, whether they are delivering packages or medical supplies,” says Jack Elston, Ph.D., CEO of Black Swift Technologies. “Regardless, the drone needs to identify where it is at all times so that when it makes its delivery it avoids people, vehicles, or structures. The same is true if the UAS needs to make a safe emergency landing.”

Elston notes that the same concepts would be applicable in planetary exploration, such as future missions to Mars. This machine vision technology would enable the operators to know where the device landed, how it landed, and determine the status of the vehicle after it landed.
